SREcon EMEA 2018 conference notes

One of the three SREcons worldwide, this was a long and intense conference with a three-day multitrack format and a correspondingly voluminous collection of notes. The crowd felt different from the North American one: a lot more “large company” folks (heavy presence from Google, Microsoft, Facebook, and others), a lot more smokers than there would be on this side of the pond, and somewhat more conservative technology choices (the last point is similar to what I felt at DevOpsDays TLV last year as well).

I would cluster the talks I attended at this SREcon into five major themes, three of them with a strong presence at this event specifically (sustainable SRE; ethics and laws; and growing SRE) and two recurring themes that are a staple of any DevOps/SRE-oriented conference (“how we built it” and best practices). In each of the three unique themes, there were at least two talks that extended each other or addressed two different facets of the same problem, and it was interesting to compare and contrast different presenters’ approaches to a similar topic. This kind of comparison is harder to find at a single-track or one-day conference, simply because much less podium time is available, and is a strong point of SREcon and the efforts of its organizers.

The first theme, sustainable SRE, is about making sure an SRE team (and, by extension, any DevOps-style team with a pager) is sustainable in the long term and does not buckle under manual operational load or from repeatedly getting woken up in the middle of the night. The complementary talks are Damon Edwards’ notes on running an SRE team and Jaime Woo’s call to make sure the responders themselves, not just the systems, are taken care of after incidents.

To help manage the workload, Josh Deprez talks about retiring (not deprecating) systems; Vanessa Yiu shares lessons learned dealing with dark debt; and Effie Mouzeli contains and manages existing technical debt as a new manager on a team.

The second theme, ethics and laws, combines theory and practice with a general ethics discussion in the software field by Theo Schlossnagle followed by practical tips on organizing and effecting change from Liz Fong-Jones and Emily Gorcenski. New legal developments in GDPR by Simon McGarr round out the theme.

The third theme, growing SREs, is important because the knowledge of this discipline is not well taught in schools. Instead, people become SREs by learning and growing, from software engineers or other fields. Fatema Boxwala starts this theme with Ops internships. Kate Taggart continues, one career step ahead, by telling us junior engineers “are features, not bugs”. Concluding the theme, Dawn Parzych lists skills good SREs should aspire to, pointing newbies and seniors alike towards self-improvement.

The “how we built it” track covers:

- full system architecture (Bloomgarden, Scherer/Knott)

- replacing a database technology in-place (Hartmann/Schlossnagle)

- kernel upgrades (Kadbet)

- Kubernetes (Kurtti)

- service interconnect in Golang (Erhardt)

The best practices track addresses:

- pen-and-paper incident games/exercises (Barry), recommended

- documentation (Blackwell)

- planning for end-of-phase stage of software systems (Ish-Shalom)

- borrowing ideas from other fields, medicine in this case (Turner)

- scheduling and bug tracking (Pennarun)

- code reviews (de Carvalho)

Within the talks themselves, a common refrain has been “we built X before Y existed but now we would just use Y”, underscoring how many of the cloud computing primitives and commonly used systems have matured and become mainstream at solving problems that required custom expensive development just a few short years ago.

The remainder of the notes is roughly and opinionatedly sorted by their level of interest to me. Video and audio of the talks have been posted and are accessible from the program page.

Talks

Clearing the Way for SRE in the Enterprise—Damon Edwards

Damon’s talk had SRE in the title, but I thought large parts of the talk were applicable to the problem of trying to coordinate work across multiple teams in general, and touched on important balancing questions of consistent organizational processes on one hand and the agile manifesto of team-driven process adjustment and autonomy on the other.

SRE teams in particular have to deal with opposing forces of “going faster” and transparency (a DevOps goal; opening up) versus stability, security, and compliance requirements (locking things down).

Changing job titles from sysadmins to SREs doesn’t really do anything to help. Teaching specific skills to individual people also does not change the equation that much. What does make a difference? Quote from Stephen Thorne “Principles of SRE” at London DevOps Enterprise Summit:

- SREs need SLOs with consequences for (not) meeting them

- SREs must have the time to improve the state of things (limiting toil)

- SREs can self-regulate their workload

Collaboration with other teams (often needed to fix SLO issues) is impeded by silos: people working with different contexts from each other (backlogs, tools, reporting chains, information). That creates disconnects and managers focused on protecting team capacity against external tasks, working on internal backlogs. Shared responsibility is difficult to impossible in a siloed workplace; SREs can’t keep up with demand or protect their capacity to make improvements. Shared responsibility is also a struggle in cultures of low trust (see below).

Ticket queues and systems are a common way to connect between mismatched silos, but these are a terrible way to manage work: long cycle time; higher risk; overhead to create and track tickets; lower quality and motivation. In the language of Lean, queues break apart value streams.

It’s better to get rid of silos entirely. Cross-functional teams are one option; but shared responsibility is more important (clear lines of responsibility and consequences) in the Google model vs. the cross-functional model.

SREs can face excessive toil (defined as manual, tactical, linearly-scaling work). I liked the “increases with scale” vs. “enables scaling” difference between toil and engineering. When toil ramps up, the team has no ability to improve business and no ability to reduce toil. The fix is “simple”: tracking toil and setting toil limits.
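"Tracking toil and setting toil limits" boils down to simple arithmetic once the hours are recorded. The sketch below is only an illustration: the `WorkLog` structure, the function names, and the 50% default limit are assumptions of mine (the limit echoes the commonly cited Google guideline), not something shown in the talk.

```python
from dataclasses import dataclass


@dataclass
class WorkLog:
    """Hours a team logged in one period (hypothetical structure)."""
    toil_hours: float          # manual, tactical, linearly-scaling work
    engineering_hours: float   # work that enables scaling


def toil_fraction(log: WorkLog) -> float:
    """Share of the logged time that went to toil."""
    total = log.toil_hours + log.engineering_hours
    return log.toil_hours / total if total else 0.0


def over_toil_limit(log: WorkLog, limit: float = 0.5) -> bool:
    """True when toil exceeds the agreed limit (0.5 is an assumed default)."""
    return toil_fraction(log) > limit
```

A team that logged 30 hours of toil and 10 hours of engineering work would sit at 75% toil and be over such a limit, which is the signal to push work back or prioritize automation.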

The ability to self-regulate is closely related to trust. In low-trust environments, ideas and solutions are close to the problem but impactful decisions are made some degrees away, and context degrades with every degree of separation. People at the edge know what to fix but lack tasking, priorities, decision control. Engineers aren’t trusted to design their own work. To make better decisions faster, push the ability to decide close to the problem. Fowler: “People operate best when they can choose how they want to work”.

Low trust + approval requirements give management an illusion of control. Out of people who are on the list to approve something, remove…

- info radiators (“I need to know”)

- CYAs (“We need to follow the process”)

- people too removed to judge

and then how many are left? (same process with approval tickets)

SRE skillset is worth $$$. Stay out of their way (minimal queues, including ticket queues); observe-orient-decide-act in tight loops.

Tickets should only be used for true problems / issues / exceptions / enhancement ideas, and necessary approvals (regulatory, etc.), not as general purpose work management system (“everything I do has to pass through the ticket queue”).

Automation work can reduce toil in multiple fields:

- access restrictions: avoid “I could fix it, if only I could get to it”.

- abstracting visibility away from the shell prompt: instead of everybody ssh’ing and running diagnostic commands, collect information and metrics and centralize that visibility with a link that can be freely shared.

- replacing written runbooks/wikis with automation, making it impossible to do the wrong things. This reduces the chance of someone following a previously correct but now outdated runbook and making a mistake.

- embedding security and compliance into “operations as a service” automation

What about ITIL? Agile+DevOps+SRE have self-regulation and shared responsibility features that undermine the command and control nature of ITIL. “Standard change” is still an implied approval model.

See also:

- Slides

- All 3 of the SRE principles require leadership buy-in

- Ops resolve tickets and are idle when the ticket queue is empty; optimal state for Ops is a stable queue. SRE, when their queue is empty, improve the future; the SRE optimal ticket queue state is 50% idle. If SRE are not given time to make tomorrow better than today, they become an expensive Ops team. (I do not remember the source of this. My notes refer to Ashley Poole’s tweetstream of Stephen Thorne’s “SRE team lifecycles” talk on Day 3.)

Your System Has Recovered from an Incident, but Have Your Developers?—Jaime Woo

Everybody has a postmortem process for a major incident, but almost nobody has a process to follow up on the people involved in it. Out of 40 engineers straw-polled, 40% said they were stressed or very stressed following an incident, and all kinds of metrics (mood, ability to fall asleep…) for them suffered significantly. Jaime surveyed various other professions, as diverse as doctors vs. Olympic athletes, and shared their lessons on handling stress.

After a medical error, doctors experience similar levels of stress; 82% felt peer support and counselling would help, but many do not ask about it. For SRE, almost half of engineers polled said coworkers never reached out to see how they’re doing post-incident.

Some tips given to comedians who are bombing a set:

- afterwards, contextualize on a higher level (“still killing it 8 out of 10 times”)

- what went wrong and what you’d fix for next time

- self-kindness: being understanding and accepting towards self during bad times, instead of being self-critical or highly judgmental

- how to mentally return to a good place

How, indeed? More polls: 50% said engage in a hobby or something fun. 30% spent time with people they care about. Other options: talk with someone, sleep, exercise, mindfulness (at this point, Jaime walked the talk and did a mindfulness break on-stage).

A few more things that can be done to improve people’s response to an incident:

- introduce meaningful breaks

- choose more productive times of day (can we stabilize the situation until the morning and tackle it then?)

- discuss access needs explicitly (for example, someone with a hearing impairment could say “I have an issue hearing on the right side, so I’d prefer to sit here in the war room, and try to speak to me from the left”).

Ethics in Computing—Theo Schlossnagle

This talk was the last one of the day in its track, but that notwithstanding there were amazingly few people in the audience for it, perhaps 10% of the hall capacity. Given the political climate and numerous recent ethical events (some examples given in the talk: Volkswagen emissions scandal; Uber Greyball; Strava’s global heatmap), that was quite surprising to me.

The talk started with an introduction to ethics as concepts of right and wrong, and the possible lenses to view ethical problems through (virtues, duties and rules, consequences). Theo touched on changes: ethical questions and approaches change because human society changes (globalization, increasing scientific understanding, growing and waning of supernatural beliefs). Various fields where ethics are applied were discussed: clergy, medical, business, professional.

Like security, ethics and ethical concerns should be everyone’s responsibility and on everyone’s mind. Some easy questions to ask: Is this going to help people? Disenfranchise people? Hurt one group and advance the other?

The talk concluded with a list of practical steps:

- You are not alone

- Ethics are a spectrum. There are no hard lines

- Keep records of every single thing

- Build a dispassionate defense

- Work the system

- You can leave

The Nth Region Project: An Open Retrospective—Andrew Bloomgarden

“If you don’t get effective at software and system migrations, you’ll end up languishing in technical debt” - Will Larson

While Andrew spoke about a complex project to enable New Relic to expand to new regions easily, the themes are easily generalized to any complex infrastructure project. The general suggestions and lessons made this talk particularly valuable.

The scale of New Relic is 600 million events/minute, 50 engineering teams. Everything runs in a single region. They do disaster recovery exercises, so they knew how to build new regions, but the process was extremely painful. Team was facing typical scaling problems: many-service env, multi language, service discovery, secret management, container orchestration. Needed to automate.

How to go about automating a manual process? For example, obtaining database credentials for a service: file a ticket, DB team does and shares creds, add them to your service configuration, deploy. That’s not very easy to switch from manual to automated without changing the entire process around it. A better approach: programmatically declare you need db access, something happens, you deploy the service (the “something happens” can be a manual process in the beginning, but then the database team can iterate on automating the process without touching the external interfaces).
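The "declare what you need, something happens" idea can be sketched as a stable interface with swappable implementations. Everything below is hypothetical (class names, the returned strings, the `deploy` helper) and is not New Relic's actual tooling; it only illustrates that the caller's code stays the same while the database team moves from a manual to an automated backend.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


@dataclass(frozen=True)
class DatabaseAccessRequest:
    """Declarative statement: this service needs access to this database."""
    service: str
    database: str


class CredentialProvisioner(Protocol):
    def provision(self, request: DatabaseAccessRequest) -> str: ...


class ManualProvisioner:
    """First iteration: the request lands in a queue for a human to fulfil."""

    def __init__(self) -> None:
        self.pending: List[DatabaseAccessRequest] = []

    def provision(self, request: DatabaseAccessRequest) -> str:
        self.pending.append(request)
        return f"pending:{request.service}/{request.database}"


class AutomatedProvisioner:
    """Later iteration: same interface, no human in the loop."""

    def provision(self, request: DatabaseAccessRequest) -> str:
        return f"secret-ref:{request.service}/{request.database}"


def deploy(requests: List[DatabaseAccessRequest],
           provisioner: CredentialProvisioner) -> Dict[str, str]:
    """Resolve every declared dependency; callers never see how it is done."""
    return {r.database: provisioner.provision(r) for r in requests}
```

Service owners call `deploy` the same way on day one and after the automation lands; only the provisioner passed in changes.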

Which high level interfaces were needed for region migration?

- Service discovery:

  - declare your dependencies

  - actual locations injected as env vars, credentials included

  - static analysis of dependencies → automated bringup sequencing

- containerize all the things:

  - Stateless services in Mesos (started using Mesos in 2017)

  - Cassandra in Docker since ’15

  - Relational DBs in Docker since ’17

- CoreOS, not CentOS:

  - for running containers only, simplified configuration

  - built in configuration system, Ignition

- Terraform, with custom providers as necessary
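Once dependencies are declared, deriving a bringup sequence is a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the service names and the dictionary are made up for illustration, and the talk did not say which algorithm or tool New Relic used.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical declarations: each service maps to the services it depends on.
DECLARED_DEPENDENCIES: Dict[str, Set[str]] = {
    "database": set(),
    "auth": {"database"},
    "api": {"auth", "database"},
    "ui": {"api"},
}


def bringup_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return services ordered so that dependencies start before dependents.

    Raises graphlib.CycleError if the declarations contain a cycle.
    """
    return list(TopologicalSorter(dependencies).static_order())
```

A cycle in the declarations surfaces as a `CycleError`, which is exactly the kind of problem static analysis can catch before a region buildout starts.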

What was not done? Did not attempt Kubernetes, given time constraints. Did not tackle load balancers.

Overall project plan: discovery, fan out, test, release. Design was validated using a subproject that tests it thoroughly. Did 3 different buildouts total - repeated integration exercises. When a team does the work but it’s not validated, issues crop up weeks later, but the team doesn’t have bandwidth to fix them straight away - blocking the integration. “team thought they were done but then they weren’t”.

Various problems encountered on the way:

- Prioritization: project was not prioritized and then had to ramp up very quickly. Team was caught unprepared with docs, core tooling, philosophy to help people make decisions.

- Work that was not known in advance received pushback as scope creep / moving the goalposts.

- Their oncall/question answerer is internally known as a “hero” on a team. Since heroes rotate, they did not have the context necessary to do the work in the optimal way.

Communicating changes: internal blogging culture at New Relic is strong; but keeping up with all available information is hard. Engineers do not know what they are supposed to be reading. All of the communication methods have drawbacks: blog posts and emails don’t get read; townhalls are optional; tools don’t get used. Centralized docs proved to be more useful than blog posts, but that was discovered too late.

Local maxima: engineering teams that own a bunch of tooling, the tooling works well for them, and they do not want to migrate because there’s always a transition pain and a potential misfit. Team specific tooling → transition pain → hopefully, more benefit from future tooling. The benefit is to the organization overall, but individual teams may not see it that way. Have empathy for teams stuck in a local maximum, communicate, find out how they react - don’t make assumptions. Improving standard/shared solutions to be able to offer clear benefits over existing tooling helps.

SRE Theory vs. Practice: A Song of Ice and TireFire—Corey Quinn, John Looney

“DevOps are developers pretending to be SREs”

Corey and John lightened up the mood with their brilliant and heavily sarcastic take on the SRE culture and common fallacies of startups. I hope they realize that given a large enough audience, the probability someone will retweet the hottest quotes and forget the /s approaches 1.

The second half of the talk discussed maturity models and offered plenty of practical advice on various topics: capacity planning, operations, and so on. The goal of maturity models, as described, is not to get to the top level – but to decide what is most important to work on first given where the organization currently is.

Have You Tried Turning It Off (and Not On Again)?—Josh Deprez

Google is well known for having turned off popular services in the past. Josh walked us through best practices of killing a service.

Why shut down systems at all? Turning off legacy systems is a good way to reduce toil and oncall load. Killing legacy, hopefully, improves both reliability and long term velocity of the team. Deprecation is not turning things off, it is merely telling people to stop using something. The hard work is in turning things off: restricting access, capacity, killing systems. SREs are often involved in that because usually they have the keys (automation, procedures) for a controlled demolition.

Why is the work hard? Curse of deprecated systems: can’t turn off, people still use it. A perception of a new system being “not ready” (meaning “doesn’t have enough users yet”) for migration. Teams could see legacy services as better supported. New systems might not faithfully replicate quirks of previous systems (Hyrum’s law: enough users → all observable behaviors are depended on by somebody, regardless of docs.)

Planning a shutdown: the shortest possible lead time for infrastructure change mandates is one planning cycle (3 months for Google) - migration work needs to be scheduled, announcements need to be repeated, and so on.

Messaging about the shut-down needs to be repeated a lot across multiple avenues. [ACTION REQUIRED] in the subject line; second line: “this is the first(second,last) announcement of this change.” As for the audience, individual / non-specific list of teams (bystanders) / broad announcement list (noise floor) are not the best options. Better would be service-specific team lists; leads; oncalls. Best would be recording contact emails from the allow-only list of users of newly introduced systems - this becomes the contact list for turning things off.

A short time after someone migrates to the replacement system, remove their ACL to access the legacy system. All users gone - system can be retired. As this happens, in preparation for turnoff, gradually reduce capacity - fewer users need less resources, and rollback/recovery becomes easier. If there is one user left… perhaps just transfer the service to their ownership?

Turn off alerting before shutting things off! After a system is removed, a way to “soften the transition” could be to write a stub service serving redirects or 410 Gone for endpoints that are not coming back. Less toil, fewer problems if this is turned off… in fact, do turn the redirects/errors off frequently to avoid people becoming dependent on the new behavior.
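A stub that answers for a retired service can be very small. The following is a minimal sketch using only Python's standard `http.server`; the redirect table, paths, and port are hypothetical, and this is not the tooling described in the talk.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, Tuple

# Hypothetical mapping of old endpoints to their replacements.
REDIRECTS: Dict[str, str] = {
    "/v1/reports": "https://reports.example.internal/",
}


def tombstone_response(path: str) -> Tuple[int, Dict[str, str]]:
    """Decide how the retired service answers a request for `path`."""
    if path in REDIRECTS:
        return 301, {"Location": REDIRECTS[path]}
    return 410, {}  # 410 Gone: the endpoint is not coming back


class TombstoneHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, headers = tombstone_response(self.path)
        self.send_response(status)
        for name, value in headers.items():
            self.send_header(name, value)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Run the stub until interrupted."""
    HTTPServer(("127.0.0.1", port), TombstoneHandler).serve_forever()
```

Calling `serve()` starts the stub. Per the advice above, the stub itself should be switched off regularly so that nobody starts relying on the redirects.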

Other cleanups once a system has been retired:

- docs

- code (don’t want people to spin up their own versions)

- related TODOs in other code

- infrastructure configuration as code

- Load balancers, DNS, other infrastructure resources

- container images or wipe disks of physical systems (someone could start it back up and start serving…)

Organizing For Your Ethical Principles—Liz Fong-Jones and Emily Gorcenski

This talk was not about ethics, but about action. Ethical codes and trying to fix ML bias are not enough, too little and too late. Being ethical is not the same as following a code of ethics–it’s possible to follow a law and be immoral. Some examples of recently built systems that actively harm people: (US) ICE software change that always recommends “detain”. Suspected link (a statistically significant correlation) between certain Facebook communities and violence against migrants in Germany. What can tech people do to change that?

A few polls. About 5% of the audience had to work on ethically questionable projects. About 30% experienced or saw someone experience harassment.

Some causes for action:

- working conditions. “how many people have done unpaid on-call?”

- do products we design discriminate? (poor accessibility; machine learning bias)

- ecosystem effects, contractors & gig economy, gentrification, tech lobbying…

How to effect action?

- St Paul principles: diversity of tactics; separation of space and time; keep debates internal; oppose state actions and don’t assist law enforcement against activists - some of these are similar to tech incident management principles.

- Companies that have “do good” clauses and encourage debate - use these tools. Both ACM and IEEE have codes of ethics that can be used to support individual decisions.

- It’s easier to make change with early involvement. Before shipping. Fast iteration and feedback. Listening to coworkers, customers, diverse networks.

- Building networks and trust, using a variety of methods or media.

- Listening (vs. fixing), emotional-rational content, using safe methods.

- Identifying and cultivating relations with decision makers.

- Effective persuasion and communication.

How to make sure efforts are sustainable and safe?

- Each individual crisis is toil; need to develop a scalable framework. (#techwontbuildit)

- whistleblower protections are generally weak; a result could be a loss of the job.

- Having plans (runbooks) - what is your backup plan? a plan for when a coworker is affected? is there a collective plan? If you are an executive or manager - is your company ready to handle an ethical escalation internally? with a client? with a supplier?

- The cost of a change on an individual will be less the more people participate.

- Avoiding burnout - it’s a long haul, progress can take years. It’s okay to take a step back.

If all else fails…

- employee petitions; 5% are significant.

- media engagement, but generates a lot of resistance in return.

- complaints to regulator, director, shareholders.

- strike or quit. Engineers are expensive to replace, and if there is a mass action it could be impossible.

Ethics are an integral part of a job. Ethics crises are process failures, and there is a need for continuous ethics processes.

Data Protection Update and Tales from the Introduction of the GDPR—Simon McGarr

“People were scared about [GDPR] fines, but the fines are not interesting. The history of tort law shows civil actions are the most significant issue.”

Now that GDPR is in effect and everybody has updated their privacy policies, what’s next? GDPR applies to everyone in a European Union (EU) state or processing EU data, and also to those bound to EU law due to international agreements, which are commonly entered into as part of trade negotiations and such. Some companies are trying to waive GDPR in terms and conditions for people outside the EU, but it’s futile.

We should expect several GDPR-related events before Xmas: EU-US Privacy Shield likely going away; first GDPR-related lawsuits reaching EU national courts; first actions from people covered by GDPR but residing outside the EU showing up in Irish courts. Ireland doesn’t have class actions, but it does allow putting thousands/millions of people in a single court action.

Consent is a basis for lawful processing, but it is becoming a lot more complex than just clicking “OK”. By definition, all privacy-related problems start with a user saying “I didn’t know you were doing that with my data”. If your consent is too complicated to understand, you do not have consent, even if someone ticked the box.

How does Article 22 apply to many companies’ most cherished products? “right [to] not be subject to a decision based solely on automated processing… which produces legal effects… or similarly significantly affects [the user]” can describe a lot of advanced algorithms.

Similarly, Article 35 requires data protection impact assessments for great new ideas. Steer people to the Data Protection Officer (DPO) if they don’t have an assessment. See also the guidelines on DPOs: not everyone can be a DPO; many management positions and also technical positions may be excluded.

Max: GDPR continues being an important topic with relatively little coverage. See also a session from earlier this year at ShmooCon.

Managing Misfortune for Best Results—Kieran Barry

Kieran described Google’s implementation of a virtual “Failure Friday” scenario: giving an engineer a “dealing with an outage” experience without involving real systems and tools. This is a recommended practice in the SRE book and has a number of names: “wheel of misfortune”, “walk the plank”, “manage the outage RPG”.

The facilitator describes the scenario. The victim says what actions they would take, and the facilitator explains what the results of each action are. There’s an assigned scribe. The victim is typically the next new on-call. The usual length of the exercise is one hour, with 30-40 minutes allocated to the scenario, followed by a debrief.

During the exercise, there is typically no collaboration between the victim and the rest of the team, but team members can join up at facilitator’s request if there isn’t much progress. The facilitator may elicit better context by separating between the person who decides what to do and the person clicking the mouse/keyboard, if real tools and systems are used. The facilitator can also break the 4th wall by involving the rest of the team, e.g. other ways to do X, one answer per team member.

This practice has benefits in sharing troubleshooting ways, acquiring knowledge of systems and fluency, getting to on-call faster, and increasing tolerance for on-call stress. The goal is to have a high value training experience, with stress load calibrated to the engineer, while maintaining safety and meaning and involving the whole team. The goals align with values of effective teams: psychological safety, dependability, structure/clarity, and meaningful work. Dealing with elevated stress is explicitly a goal; however, knowing everything is not a goal, as otherwise the value is limited (nothing new has been learned). If, given these goals, people still tend to avoid the sessions, there is probably a failure of safety or meaning in play.

The scenarios should be solvable; these are not exercises in the style of Kobayashi Maru. They can be based on the data captured during previous incidents, and could be reused across teams: different teams will have different habits and incident management protocols.

A highlight of the talk was a live demo with a volunteer from the audience, given a scenario from the Google infrastructure and with a bit of context/help by another “team member” (Google employee). There was an objection to using the term “victim” to describe the engineer playing the on-call role.

What Medicine Can Teach Us about Being On-Call—Daniel Turner

Medicine has been around for a while, and is constantly refining and improving its processes. There is a lot we could learn by comparing its practices and processes with ours.

For a doctor, a 12 hour shift equals 60 pages. “Don’t hesitate to escalate” (Rapid Response Team): anyone can page, no negative repercussions; decreased ICU deaths by 12%.

Checklists for a procedure reduce self-inflicted incidents: infection rates dropped from 11% to 0%. The SRE equivalents of self-inflicted incidents: right command, wrong server; skipping crucial steps in routine activities. But checklists do not help with complicated problems.

Kits of materials to handle a procedure also include preparations for uncommon cases - better to have an uncommonly used item and not need it, than to not have it when the need arises.

A decision to stall is a valid way to delay investigation to a better time: sometimes waiting until the rest of the team wakes up is fine. Can we temporarily throw cheap resources (more instances) at the problem?

Formalized oncall handoff process, IPASS:

- illness severity

- summary (statement, recent history, plan)

- action list

- situation awareness (what’s going on generally, what’s going to happen)

- synthesis: receiver of the information recaps, questions, restates key facts and action items.
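One way to borrow the handoff structure above for on-call is to make it a record that is not considered complete until the receiver has recapped it. This is a sketch of my own, with hypothetical field and method names, not something shown in the talk.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OncallHandoff:
    """On-call handoff modelled on the five IPASS steps (illustrative only)."""
    severity: str               # how bad things are right now
    summary: str                # statement, recent history, plan
    action_list: List[str]      # what the incoming on-call must do
    situation_awareness: str    # what is going on and what is expected next
    synthesis: str = ""         # recap written by the receiver

    def acknowledge(self, recap: str) -> None:
        """Receiver restates key facts and action items in their own words."""
        if not recap.strip():
            raise ValueError("the receiver must recap the handoff")
        self.synthesis = recap

    @property
    def complete(self) -> bool:
        """A handoff only counts once the receiver has recapped it."""
        return bool(self.synthesis)
```

The point of the `acknowledge` step is the same as in medicine: restating the facts gives both sides a chance to catch misunderstandings before the outgoing on-call leaves.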

Why do doctors always ask for everything to be repeated? Doctors are individually responsible for their actions, so they recheck facts and catch mistakes / misinterpretations in what they knew or were explained before.

Escalation chains are formalized: nurse - intern - senior resident - attending physician - department chair - chief medical officer. How bad do things have to be for the SRE on-call to page a C-level exec?

Post-call days: the medical profession acknowledges that rest is needed after on-call. It also pays for on call: 20% of median neurosurgeon wages come from on-call pay, because many refused to be on call for less. On-call is a great way to learn, but also a great way to burn out, and people will leave/take pay cuts to avoid oncall.

SRE for Mobile Applications—Samuel Littley

Samuel talked about several differences and issues encountered while trying to apply SRE principles to mobile. At Google, there’s only one SRE team dedicated to supporting mobile applications.

SLI/SLO differences: the client side fails a lot (out of memory, connectivity issues, and so on). Also, old and very old versions continue being used, but only new versions get reliability improvements. This means that interpreting error budgets is harder. To help, filter out unimportant failures from reporting, and allow small regressions in SLIs while continuing to fix issues.

In feature control, flags are used heavily to implement rollouts that allow rollbacks. Rollouts are randomized per flag to test different feature combinations, but this can lead to a metrics cardinality explosion based on features enabled. Flags are not without issues (leaking features in code, enabling too late if rollout is done post-announcement) but on mobile flags are the primary control surface.
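Per-flag randomization is often done by hashing the flag name together with a stable device identifier, so that each flag selects a different, independent slice of users. The sketch below assumes that approach; the function names and the 0.01% bucket granularity are my own choices, and the talk did not describe Google's implementation.

```python
import hashlib


def flag_enabled(flag: str, device_id: str, rollout_percent: float) -> bool:
    """Deterministically decide whether `flag` is on for this device.

    Hashing flag and device together means each flag picks its own
    slice of devices, so flags are exercised in many combinations.
    """
    digest = hashlib.sha256(f"{flag}:{device_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0.01% granularity
    return bucket < rollout_percent * 100


def max_flag_combinations(flag_count: int) -> int:
    """Upper bound on distinct on/off combinations of independent flags."""
    return 2 ** flag_count
```

The second function shows why metrics labelled by the full set of enabled flags explode in cardinality: ten independent flags already allow up to 1024 combinations.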

A few other mobile tips:

- server side fixes are the best–some clients will never get the fix if it’s on the client side

- sample time to react to a change can be within hours

- canary all the things

- caching configuration is risky: if there’s a bug that crashes on cached data before pulling new configuration, you may never be able to fix this. There’s an example of that in the talk, with permanently increased error rates.

- other apps/libraries can break yours: Play services update broke some Google apps.

The 7 Deadly Sins of Documentation—Chastity Blackwell

“Avoid jargon; as organizations grow, simplify more. Don’t make all the new people and other teams in the company learn your jargon.”

93% of open source projects, and most workplaces, think their docs are insufficient. The root cause is usually deprioritization: documentation is seen as “extra”. But there are plenty of reasons to put docs front and center: onboarding; institutional knowledge; incident response; inclusiveness; business continuity. If reasons for past decisions are not recorded, it becomes harder to revisit them.

Another expression of reduced priority could be leaving the docs to new or junior team members. Instead, have junior people (who have less context and are more reliant on docs) review pages written by other engineers. The culture should make it feel safe to ask “stupid questions”.

Separate runbooks from technical documentation:

Runbooks:

- Immediate instructions

- Address specific questions

- Inverted pyramid writing style

- “Example commands should be benign” - a sample command for, let’s say, resetting a test database should not show how to do it on the production DB.

- Have explanations, but really short ones

- Test the runbook

Technical Docs:

- Thorough system description

- Why are things the way they are (reasons for decisions, context)

- Specific examples

- Know the audience for each document

- No autogenerated docs (from code), they generally are not good at answering the requirements set out by the previous 4 bullets

- No sending people off to rabbitholes

- Test that people understand what you intend to say

All documentation should be kept in one place–multiple places cause confusion. The two mandatory features for a doc repository are change tracking and search. Tickets or chat logs are not good documentation sources. Neither are code comments (generally do not say why, getting context is difficult, require tech knowledge).

Overgrowth: keeping out of date pages around. Having obsolete information “just in case” interferes with search and slows down incident response. Incorrect docs hurt new team members the most.

Video documentation can be easier to present, but demands more attention. Generally not searchable, less accessible, and much harder to edit/revise. Tips: video is best for infrequently updated stuff consumed end-to-end, like onboarding. Supplement video with timestamps for content and subtitles; consider transcripts.

Sample style guide: state the authoritative source (e.g. the wiki); write the title for discoverability; describe standard doc types (guides, howtos, runbooks); supply general writing tips; have consistent place and formatting for links to other resources.

Circonus: Design (Failures) Case Study—Heinrich Hartmann, Theo Schlossnagle

“Fixing operability or fixing correctness? Either is hard to solve fully but operability pays off sooner.”

The talk covered some choices and technology transitions of the Circonus monitoring platform. The twin challenges for Circonus were being a monitoring platform (for a telemetry system, the flow of data never stops - there are no “low load” times to do maintenance, upgrades, etc.) and being early to the market, when components we see as standard today (Kafka) did not exist yet. Their homegrown message queue system is optimized for the specific use case, but today they would “just use Kafka”.

In addition to a message queue, Circonus also built their own database (similar to Cassandra) and a custom storage format for data. The reason for ditching Postgres was high operational effort to deal with node failure. At scale, they want to be able to recover, rebuild, resize data stores with no manual intervention.

How did Circonus switch the engine mid-flight? Running the new system in parallel with the old system for many months, with very easy switches to and from, allowed faster and more confident development. Better visibility, even within a process: instrumenting each individual thread in a process in a trace, treating each process the same as a distributed system.

Other quick take-aways:

- Retaining performance data of systems is the only way to know they are doing better

- When a system state is kept over days and weeks, observability in prod is crucial

- Circonus have underestimated development effort to build operations tooling

- On a dashboard, place service metrics on the top row

What Makes a Good SRE: Findings from the SRE Survey—Dawn Parzych

This is the survey in question. 416 responses were counted, but only 1% South America and 1% Africa. We are a very diverse industry: second largest category (32%) is “other”.

When hiring, don’t look for all of the skills; a variety of skills is important on a SRE team, but not for a single person. Nobody can possibly know everything. A bunch of answers on backgrounds were non-technical altogether–music performance, theology, English literature were among the answers. The profession skews highly experienced - mid-career+, fairly flat starting from 6-10 years to over 20 years.

What metrics are used to determine success: number of incidents, MTTR, MTBF, deploy frequency; revenue is #5 at 30%. But a lot of people said there are no metrics or the metrics are bad. The survey confirmed the desired 50/50 split of writing code vs fixing issues / tending systems. Monitoring tools were used less than expected, but some survey answers considered these alerting tools and not monitoring tools.

Top 5 skills: automation; logging/monitoring/observability; infra config; scripting languages; app/net protocols. Top 5 non-tech skills: problem solving; teamwork; cool under pressure; communication (written, verbal).

Kernel Upgrades at Facebook—Pradeep Nayak Udupi Kadbet

Regular kernel upgrades fix both security bugs and functionality bugs. They also provide new features (better metrics, newer firmware) and improved performance.

Facebook built an automation system to facilitate a rollout of a newer kernel, but it is not an automated continuous upgrade – the decision to upgrade (or rollback) is left to humans. The automation converges to the kernel version specified by the service owner and allows multiple different versions to exist across the infrastructure at the same time.

For each machine, the automation follows a finite state machine approach:

- liveness check of a machine

- drain (e.g. MySQL - promote another machine to master; service - drain all clients; disable monitoring etc.)

- upgrade the kernel

- reboot

- undrain (restart services, rejoin the pool, reenable monitoring…)

- done
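The per-machine steps above can be sketched as a small linear state machine. This is a toy illustration only: the state names mirror the list, while `run_upgrade` and the `actions` hooks are hypothetical and say nothing about how Facebook's tooling is actually built.

```python
from enum import Enum


class State(Enum):
    LIVENESS_CHECK = "liveness_check"
    DRAIN = "drain"
    UPGRADE_KERNEL = "upgrade_kernel"
    REBOOT = "reboot"
    UNDRAIN = "undrain"
    DONE = "done"


# Linear transitions, in the order listed above.
NEXT = {
    State.LIVENESS_CHECK: State.DRAIN,
    State.DRAIN: State.UPGRADE_KERNEL,
    State.UPGRADE_KERNEL: State.REBOOT,
    State.REBOOT: State.UNDRAIN,
    State.UNDRAIN: State.DONE,
}


def run_upgrade(host, actions):
    """Walk one host through the states and stop at the first failing step.

    `actions` maps a state to a callable(host) -> bool (hypothetical hooks).
    Returns the state the host ended in; anything but DONE needs attention.
    """
    state = State.LIVENESS_CHECK
    while state is not State.DONE:
        if not actions[state](host):
            return state  # leave the host here for a retry or a human
        state = NEXT[state]
    return state


# Stub hooks that always succeed, just to show the call shape.
always_ok = {s: (lambda host: True) for s in State if s is not State.DONE}
print(run_upgrade("host-001", always_ok))
```

Keeping the current state explicit is what lets such a system resume or retry a single step instead of restarting the whole procedure.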

There are various concurrency constraints to specify parallelism of the upgrade and to serialize a rollout based on tags (datacenters) or arbitrary Python functions. There are visualizations of totals by version and environment, and running rate of upgrades performed by the system.

Sustainability Starts Early: Creating a Great Ops Internship—Fatema Boxwala

Why should we do SRE internships, especially given Dawn Parzych’s survey finding that the field skews towards senior practitioners? It’s a future talent pool, introduction to the Ops/SRE field that is not generally taught in schools, technology experience, and making measurable contributions.

Empathy for interns: they work short-term, but full-time. Often interns also do school work while interning and move to live in a different city (or country!) for a few months. They get limited benefits, and can have zero previous work experience.

When onboarding a new intern, talk about: projects/experience; expectations on both sides; resources & information; logistics of the job. Check in frequently and provide multiple contacts (HR, manager in case the buddy is not available…) Provide and solicit feedback.

A good intern project should involve multiple teams, for more exposure to people and functions within the company.

SoundCloud’s Story of Seeking Sustainable SRE—Björn Rabenstein

How SoundCloud does SRE. They have high user to engineer density: 100m users, 100 engineers. More services than engineers. Compared to Google’s 1B users, 10,000 engineers, and more engineers than services.

There are no separate DevOps teams nor SRE roles. Engineers hold the pagers–“SRE in spirit”:

- automate operations

- right sized rotations

- monitoring and alerting

- actionable pages with runbooks

- ops work <50%

- effective self regulation of feature work vs. stability

- error budgets are nice but full implementation not currently a priority

Implementing error budgets requires all of: monitoring, mgmt support, “nuclear option - return the pager” (SRE at Google can decide to stop maintaining a service). Monitoring is fundamental in general, see hierarchy of service reliability (SRE book page 104).

Minimal size of a round-the-clock, 7 days a week on-call rotation is 6 (follow-the-sun) or 8 if colocated; minimal size of dedicated SRE team is therefore also 8.

Dealing with Dark Debt: Lessons Learnt at Goldman Sachs—Vanessa Yiu

Goldman Sachs is a 38,000-employee company, and about a third of those employees are engineers. Their SRE team’s goal is to “manage system risks and complexity at enterprise scale”. The system discussed in the talk is SecDB, a risk management platform implemented using an object oriented database.

Dark debt is defined as “arising from unforeseen interactions of hardware or software with other parts of framework” and “not recognizable at the time of creation”.

I wrote down three tips:

- Controlled experiments / chaos tooling are great. They detect issues at a point in time, but systems are not static, so chaos experiments should be running continuously.

- Visualising issues in distributed systems helps. To that end, GS uses tracing/Zipkin.

- Fostering the right culture and practices: avoiding whack-a-mole troubleshooting, more runtime visibility, pair programming, hackathons and dedicated refactoring sprints to deal with things “on the back burner”.

Max’s comment: when dealing with debt of any kind is left to dedicated events (hackathons, refactoring sprints, documentation days), I would argue that is a failure of prioritization rather than a solution. Dealing with debt and maintaining systems in state of good repair should be part of the day-to-day, regular work stream.

Can I Tell You a Secret? I See Dead Systems—Avishai Ish-Shalom

The talk’s own description sums it up best: raising awareness for the end-of-life phase of software products.

Legacy is all around us: 8086 to 286 real-to-protected mode transition, still done today in the Linux kernel. The terminal window traces lineage to an 1869 stock ticker. RISC microcode in x86 CISC architecture, since Pentium Pro. SMS, a hack from the 80s, now used as authentication. How did this all happen? “This works. Don’t ever touch it.”

Infrastructure modification problems: physical, common dependencies, logistical nightmares. Modifying software is easier than changing hardware. Protocols are also hard to replace and not always designed for compatibility (see TLS 1.3 implementation issues).

Deprecation is usually not planned for, and 3rd parties can refuse to participate in the deprecation effort.

We should be building deprecatable, or at least upgradable, systems:

- have deprecation plans

- design extensible protocols

- allow users/customers to export data

- build in ability to upgrade or replace

- keep maintaining an active system

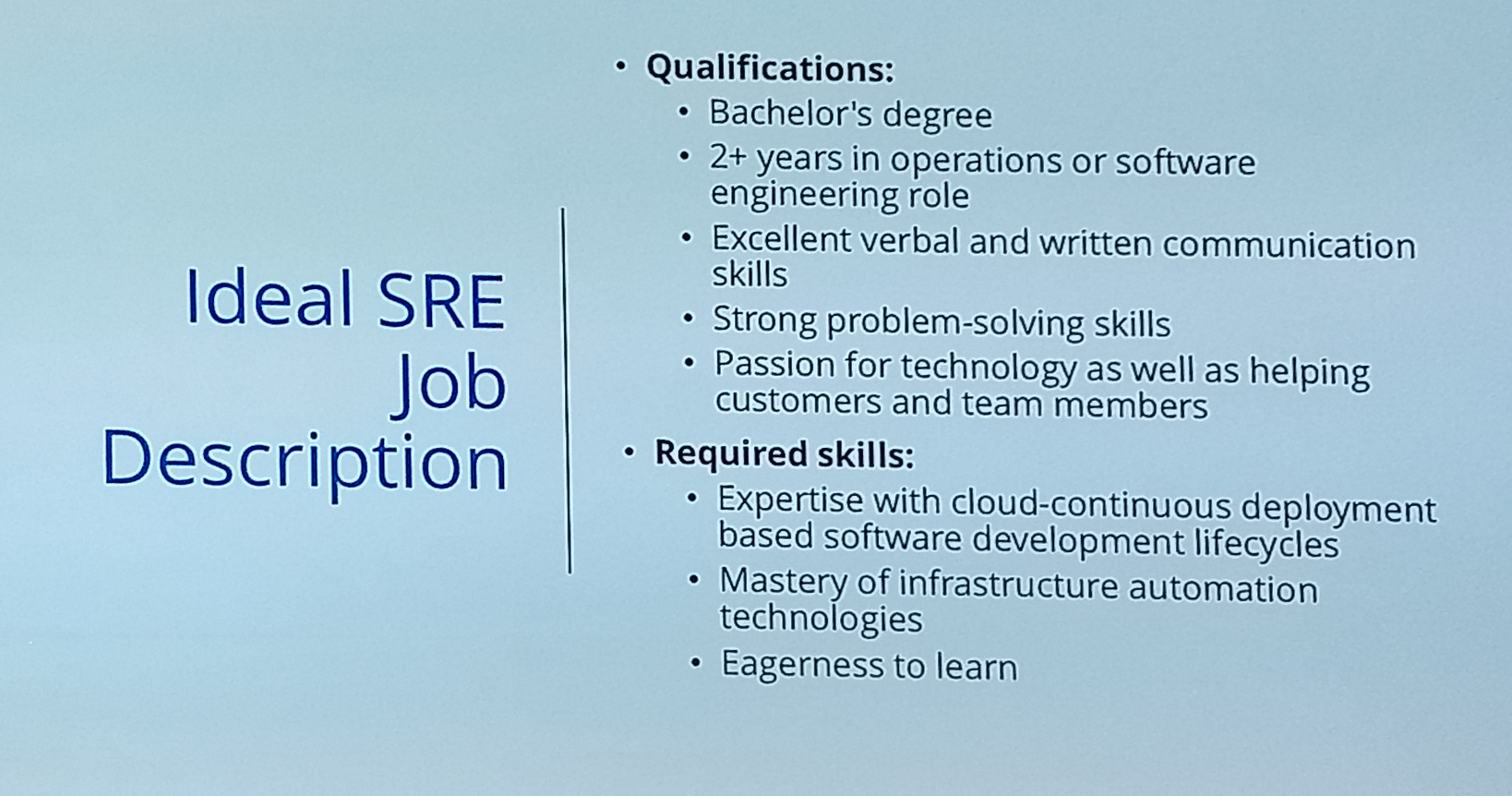

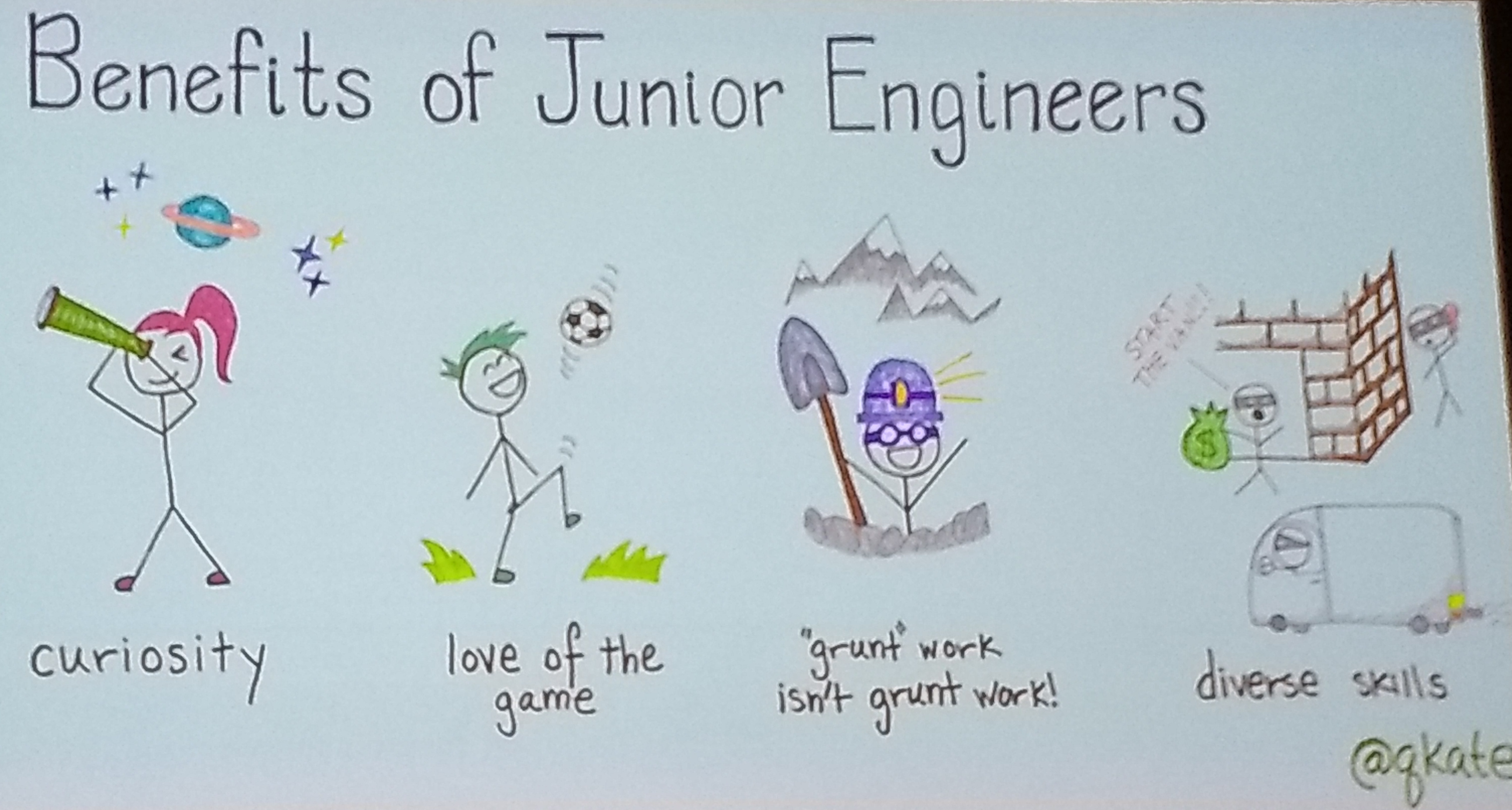

Junior Engineers are Features, not Bugs—Kate Taggart

Junior engineers are real engineers - use the term carefully. They come from multiple sources that are not just CS programs. They have a lot of advantages: curiosity, new perspective, etc. Sometimes junior engineers come with superpowers, like knowledge of formal incident response processes in one example. This often comes from unusual backgrounds and career changers.

A few common myths used to rationalize not having junior engineers are:

- our architecture is too complicated (you won’t remember it at 3am either);

- our deploy process is 18 steps and too intricate (automate? simplify?);

- some operations are too dangerous to delegate (have a tooling layer in between to mitigate).

There are several legitimate antipatterns for adding junior engineers to the mix: if extreme dev velocity is required; if there are no opportunities to support growth; if roles and product definition are very fluid. In these cases, a senior engineer will do better.

Good patterns:

- make time for learning;

- reward mentorship (in ways that are not lip service);

- check in often with junior engineers and set clear expectations;

- onboarding buddy village: instead of one buddy for all purposes, have a per-tech specialization - spreads the load around.

How do we help cram both Ops knowledge and system knowledge into a new engineer? Conferences can be useful. Reading public postmortems. Be deliberate about finding information dense opportunities to learn.

“Hire for potential”: important, but a lot of unconscious bias can creep in when doing this, so be careful. Qualities to seek: scrappiness, self reflection, and team mindset.

Keep Building Fresh: Shopify’s Journey to Kubernetes—Niko Kurtti

Shopify’s environment for 3000 engineers is Chef+Docker and Chef standalone in an AWS PCI environment. Heroku is also used and can run quite a bit of traffic without much operational involvement at all. The talk, as the title suggests, is about their implementation of Kubernetes.

Among the design goals:

- some things that don’t scale

  - manual processes

  - slow processes, making people wait

  - rusty knobs that are not working when needed

  - wobbly things that don’t work first time, every time

- things that do scale

  - tested infra

  - automation

  - safe self-serve capabilities

  - training people to have system expertise

- 3 core principles

  - works every time (they call it “paved road”)

  - hide complexity

  - self-serve

and reasons for building their own platform:

- hit most (80%) use cases

- create common patterns and hide complexity, but don’t restrict it in the future

- better tooling

- multicloud

- service mesh

Kubernetes was chosen because it has the best traction, is extendable, and is available as a service (GCP/AWS). But k8s is only the foundation; the house comprises: how to specify the runtime, how to build, how to deploy, how to set up dependencies. The surrounding tooling evolved from a service directory (services database) to a more extensive tool (groundcontrol). By default, services are put into the lowest tier, and require the creator to provide an expiration date. Extending k8s is fairly easy: Go client is high quality, having distributed systems primitives helps a lot.

Other tools:

- kubernetes-deploy, a wrapper around the k8s CLI. Pass/fail status, pre-deploy stage for configmaps and secrets, pluggable.

- cloudbuddies: small helpers that deal with DNS records, SSL certs, create various AWS resources, set user editable roles, security rules, reset bad nodes…

  - one example is NameBuddy: looks at ingress objects, CRUDs DNS records to match.

- Cloud portal: a web user interface, namespaced (devs can only see their services) and allowing common operations (ssh in, restart, etc.)
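The NameBuddy idea (look at what should exist, then create, update, or delete DNS records to match) is a reconcile loop. Below is a minimal sketch of the diffing step using plain dictionaries; it is not Shopify's code, it does not call any Kubernetes or DNS API, and the hostnames and targets are made up.

```python
def reconcile_dns(desired, actual):
    """Return the operations that would make `actual` match `desired`.

    Both arguments map hostname -> target (for example a load balancer
    address). Here `desired` stands in for what the ingress objects declare.
    """
    ops = []
    for host, target in sorted(desired.items()):
        if host not in actual:
            ops.append(("create", host, target))
        elif actual[host] != target:
            ops.append(("update", host, target))
    for host in sorted(actual.keys() - desired.keys()):
        ops.append(("delete", host, actual[host]))
    return ops


# Hypothetical state: what the ingresses declare vs. what DNS currently has.
desired = {"shop.example.com": "lb-1", "api.example.com": "lb-2"}
actual = {"shop.example.com": "lb-0", "old.example.com": "lb-9"}
for op in reconcile_dns(desired, actual):
    print(op)
```

Running the same diff again after the operations are applied yields an empty list, which is what makes a helper like this safe to run repeatedly.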

Q: frameworks now exist for building custom k8s controllers; do you use any of them? A: they did not exist in 2016; looking at them now.

The Math behind Project Scheduling, Bug Tracking, and Triage—Avery Pennarun

Avery spoke about a number of data and insights collected over years of tracking large software projects. How large? For example, 1300 bugs over 6 years (1100 fixed). The most interesting one to me was about the pace of bug fixing: it is roughly constant. If organizational changes are made, the slope may change but the bug fixing rate is still a straight line. Within a few weeks following a re-org, a prediction of bug fixing speed becomes very accurate. This also implies that a bug bankruptcy does not work, in the sense that it does not fix long-term bug fixing capacity issues (if the slope was unsustainable before, it will continue being unsustainable).
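If the fix rate really is a straight line, a plain least-squares fit over a few weeks of data is enough to estimate it. The sketch below illustrates that reasoning and why bankruptcy does not change the outcome; the weekly numbers and function names are invented, not data from the talk.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def weeks_to_clear(open_bugs, fix_rate, arrival_rate):
    """Weeks until the backlog reaches zero, or None if it never will."""
    net = fix_rate - arrival_rate
    if net <= 0:
        return None
    return open_bugs / net


# Made-up cumulative counts for the weeks following a re-org.
weeks = [0, 1, 2, 3, 4, 5]
fixed_total = [0, 4, 9, 13, 17, 21]
filed_total = [0, 5, 10, 16, 21, 26]

fix_rate, _ = fit_line(weeks, fixed_total)
arrival_rate, _ = fit_line(weeks, filed_total)
print(f"fixing {fix_rate:.1f}/week, arriving {arrival_rate:.1f}/week")

# Declaring bankruptcy shrinks the backlog once but leaves both slopes alone.
print(weeks_to_clear(200, fix_rate, arrival_rate))
print(weeks_to_clear(20, fix_rate, arrival_rate))
```

With these numbers bugs arrive faster than they are fixed, so both calls report that the backlog never clears, regardless of its starting size.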

Various examples of changing project goals / scope were presented and discussed. Based on their burn-down charts, it is much better to change goals / explore early than to do late changes or to constantly make significant changes in direction. Avery is not a fan of goal-setting, milestones (work expands to fill the time to the milestone), and project planning tools; simple sorting by sequence (dependencies) and then size (small to large) results in best outcome.
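Sorting "by sequence, then size" can be read as a topological sort in which, among the tasks whose dependencies are done, the smallest goes first. This is my own interpretation sketched in code, with made-up task names and sizes.

```python
import heapq


def schedule(tasks):
    """Order tasks so dependencies come first; among ready tasks, smallest first.

    `tasks` maps name -> (size, [names it depends on]). Every dependency
    must itself be a key of `tasks`.
    """
    remaining = {name: set(deps) for name, (_, deps) in tasks.items()}
    dependents = {name: [] for name in tasks}
    for name, deps in remaining.items():
        for dep in deps:
            dependents[dep].append(name)

    ready = [(tasks[n][0], n) for n, deps in remaining.items() if not deps]
    heapq.heapify(ready)
    order = []
    while ready:
        _, name = heapq.heappop(ready)
        order.append(name)
        for child in dependents[name]:
            remaining[child].discard(name)
            if not remaining[child]:
                heapq.heappush(ready, (tasks[child][0], child))
    if len(order) != len(tasks):
        raise ValueError("dependency cycle detected")
    return order


tasks = {
    "schema": (3, []),
    "api": (5, ["schema"]),
    "docs": (1, []),
    "ui": (2, ["api"]),
}
print(schedule(tasks))  # ['docs', 'schema', 'api', 'ui']
```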

Stories are not bugs; stories are few, big and controversial, bugs are quick, numerous, and boring.

See also: apenwarr epic treatise

Migrating Your Old Server Products to Be Stateless Cloud Services—Kurt Scherer, Craig Knott

Atlassian used HAProxy as a shared proxy for all services, so configuration changes by one team impacted others’ services. Behind it came NGINX, the app, and the database. All the usual set of problems (manually managed, etc.)

Moved to AWS. Chose Terraform for orchestration, so the rest of Hashicorp stack was adopted too (Packer). Used a monolithic Puppet repository before, but switched to Ansible - SREs preferred it. The new stack is LB → autoscaling group → RDS Postgres or EFS.

More services meant adding more monitoring, and not linearly. Atlassian wanted the monitoring effort per service to go down. Turns out Terraform works with Datadog, used it to great success.

Migrations: preconfigure a new stack; migrate data over; cut over DNS, or abort.

Building a Debuggable Go Server—Keeley Erhardt

Improbable (the company) implement their services in gRPC with protobufs and define a service as a collection of actions the server can do on request. They built a framework for Go servers called “radicle” but I couldn’t find any source code except this demo. The framework treats services as plugins and provides common functionality in the base.

What makes a service production ready?

- metrics

- logging

- tracing

- rate limiting

- auth, retry policy, panic recovery, debug endpoints…

Other options: Go kit and its layering strategy (separate operational/endpoint metrics from business logic metrics), Micro. Decided to go custom because that was before some of these frameworks existed; and wanted more flexibility.

Audience question: differences from a service mesh? Answer: not as much functionality, done in a different way.

Halt and Don’t Catch Fire—Effie Mouzeli

“Our systems will never be perfect, just manageable”

Effie’s talk appeared to be from a viewpoint of a manager / tech lead joining a team that has existing tech debt in place, and containing and managing it. There are 5 stages of dealing with existing technical debt: denial, anger, bargaining, depression, acceptance.

Handling debt is a loop, not a workflow to run through once. Once one iteration ends, go back to the beginning, do it again.

- understand how we got here

- ask customer questions, look at the backlog

- document problems and have a mental picture / dashboard of the work ahead

- prioritize

- keep your team alive: debt can kill a team by burnout, blame, tension/toxic behavior, heroes and messiah behaviors

- build a sustainable culture for going forward

Scalable Coding-Find The Error—Igor Ebner de Carvalho

A talk about the importance of and some common issues found in code reviews. One of the reasons for code reviews is to fix/find pain generators: decisions that will not scale with growing usage. Experience helps: to identify patterns, we need to have either experienced them before, or to know what to look for.

Several specific tips and gotchas:

- regular expressions, careful writing of

- exceptions vs. error codes

- atomicity - locks, idempotent calls

- costs of frameworks and libraries

- at scale, every method will break at any execution point
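As one illustration of the atomicity bullet (locks plus idempotent calls), here is a minimal sketch of an operation that is safe to retry. The class, the request ids, and the deposit example are hypothetical and were not shown in the talk.

```python
import threading


class IdempotentProcessor:
    """Apply each request at most once, keyed by a caller-supplied request id."""

    def __init__(self):
        self._lock = threading.Lock()
        self._results = {}  # request id -> result returned the first time
        self.balance = 0

    def deposit(self, request_id, amount):
        # The lock makes check-then-apply atomic; without it, two retries
        # racing each other could both apply the deposit.
        with self._lock:
            if request_id in self._results:
                return self._results[request_id]
            self.balance += amount
            self._results[request_id] = self.balance
            return self.balance


p = IdempotentProcessor()
p.deposit("req-1", 10)
p.deposit("req-1", 10)  # a retry of the same request changes nothing
print(p.balance)  # 10
```

If a call can break at any execution point, the caller's only safe reaction is to retry, and that is only safe when the callee behaves like this.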

Lightning Talks

Will Nowak talked about incident management simulation using non-technical tools. Background team work was represented by coloring activities. People not available to respond to an incident were tasked with playing Go Fish. Having to look at and understand unfamiliar dashboards was simulated by counting beads as different resources. And fixing a problem (“creating extra capacity for a service”) was simulated with building a LEGO model (“about 100 pieces are just right, takes about ten minutes and requires full concentration”). This seemed like a very fun and imaginative way to prepare a team to handle a real incident.

- Facebook has a “hack month” where one can go to work on a different team, which resulted in a nicely illustrated refactoring story by Daisy Galvan.

- A tip for pre-positioning jobs on Kubernetes clusters with poor connectivity (points of presence caches) - run the same job but replace the entrypoint with “sleep for 1 week” by Kyle Lexmond.

- Various cluster management housekeeping tasks can impact service level objectives. Stephan Erb described Aurora/Mesos plugins to drain and update nodes only when the downtime would be acceptable for services’ availability guarantees.

- Effie Mouzeli spoke about some fears in the interviewing process from both the interviewer and interviewee side. It was interesting that a candidate deciding to stay at their current job was classified as “annoying habit” and not as “the offer or the job were not compelling enough”.

- Jorge Cardoso showed some academic-looking slides applying a neural network to the problem of detecting significant changes in distributed traces.

- When the selling point of a new search engine is all the profits go to reforestation, it makes sense that business metrics (including errors) can be expressed in trees per second, as Jason Gwartz demonstrated.

Building Blocks of Distributed Systems Workshop—John Looney

On the morning of Day 3, I thought that the most interesting session was this workshop, and sat in for the first hour and a half. The workshop did a great overview of the most common building blocks of large-scale software, with opinions on many of them, while not shying away from underlying theory and algorithms.

Pipeline and batch systems are used to process huge data sets. To design a system like that, one needs to describe the system inputs and the nature of the processing, as well as the following details:

- how is the data stored

- how does the data get in

- is the data broken into parts, and how

- how would the processors synchronize among themselves

- when do we know that the work is done.

The big architectural pieces are orchestration (including finding work, ordering, and sharding); configuration and consensus; and storage. Some of the commonly heard technology blocks implementing the various pieces are Terraform; Zookeeper/etcd; Kafka/PubSub/SQS; Spark and Storm; DNS and Consul; and Mesos, Kubernetes, and ECS.

The “old school” way of doing batch systems is MapReduce: NFS as a data store, and a single master coordinating fleets of mappers and reducers. Add some coordinators to do health checks on the fleets, and a lock server (get a lock and health check it or you lose it). If that’s done, coordinators don’t need to do health checks themselves anymore.

The next big redundancy win is failovers between masters. This can be outsourced to a lock server: a combination of write lock to promote to master, and read lock to observe for an existing master failing. The same idea can be used by workers too, for example to verify they can proceed with an assigned exclusive task.
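The lock-based failover can be sketched with a lease: whoever holds the lock is master, but only for as long as it keeps renewing. The toy class below lives in a single process and is not a real lock server (there is no replication or networking); the class and method names are invented for illustration.

```python
import time


class LeaseLock:
    """Toy lock server: a holder keeps the lock only while it keeps renewing."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so the example can control time
        self.holder = None
        self.expires_at = 0.0

    def acquire(self, who):
        """Take or renew the lock; True if `who` holds it afterwards."""
        now = self.clock()
        if self.holder in (None, who) or now >= self.expires_at:
            self.holder = who
            self.expires_at = now + self.ttl
            return True
        return False

    def current_master(self):
        """Read side: the holder of a live lease, or None if it has lapsed."""
        if self.holder is not None and self.clock() < self.expires_at:
            return self.holder
        return None


now = [0.0]  # fake clock, advanced by hand
lock = LeaseLock(ttl=5, clock=lambda: now[0])
lock.acquire("master-a")         # a becomes master
lock.acquire("master-b")         # b is refused while the lease is live
now[0] = 9.0                     # a stopped renewing and the lease lapsed
print(lock.current_master())     # None
print(lock.acquire("master-b"))  # True
```

A worker can use the same primitive for an exclusive task: proceed only while `acquire` with its own name keeps returning True.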

Lockserver opinions:

- ZooKeeper: old, solid, complex

- npm lockserver.js

- Redis in a cluster

- etcd (the new hotness?)

- Consul

- Database as a lock server: lock a single row

Consensus defined:

- termination: eventually decide, unless faulty

- agreement: decided value is the same

- validity: decided value has actually been proposed by one of the peers

Challenges to achieving consensus:

- broken, or slow, or message drops?

- not always possible to achieve consensus (FLP paper)

Raft, a consensus algorithm that’s easier than Paxos to implement. Has a tunable heartbeat interval; too-fast heartbeats are too sensitive to network glitches, 100ms proposed. Paxos is another famous algorithm that is essentially Raft, but where every single log operation is an election. Slower, but true multi-master.

Work queues: items that can be leased by a worker, deleted when complete. This description is the same as lockserver description, which is the same as a replicated state machine (which describes any consensus algorithm). Unordered queue: don’t submit results if completed after timeout; “at least once”. Simpler, but duplication is someone else’s problem.
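A minimal sketch of such an unordered, at-least-once queue follows: items are leased, deleted on completion, and results arriving after the lease timeout are rejected. It is an in-memory toy with invented names, not any particular product's API.

```python
import time
import uuid


class LeasedQueue:
    """Toy unordered work queue with at-least-once delivery."""

    def __init__(self, lease_seconds, clock=time.monotonic):
        self.lease_seconds = lease_seconds
        self.clock = clock  # injectable so the example can control time
        self.items = {}  # item id -> [payload, lease expiry, lease token]

    def put(self, payload):
        item_id = uuid.uuid4().hex
        self.items[item_id] = [payload, 0.0, None]
        return item_id

    def lease(self):
        """Hand out any item whose lease is free or expired, else None."""
        now = self.clock()
        for item_id, entry in self.items.items():
            if entry[1] <= now:
                entry[1] = now + self.lease_seconds
                entry[2] = uuid.uuid4().hex
                return item_id, entry[0], entry[2]
        return None

    def complete(self, item_id, token):
        """Delete the item, but only if the caller's lease is still current."""
        entry = self.items.get(item_id)
        if entry is None or entry[2] != token or self.clock() >= entry[1]:
            return False  # too late: someone else may already be redoing it
        del self.items[item_id]
        return True


now = [0.0]  # fake clock, advanced by hand
q = LeasedQueue(lease_seconds=10, clock=lambda: now[0])
q.put("resize image 42")
item_id, payload, slow_token = q.lease()
now[0] = 11.0                            # the first worker was too slow
_, _, fast_token = q.lease()             # the item is handed out again
print(q.complete(item_id, slow_token))   # False
print(q.complete(item_id, fast_token))   # True
```

The payload may have been processed twice by this point, which is the "duplication is someone else's problem" part.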

Scaling challenges of work queues: batching - increases latency and retries. sharding - uneven load. pipelining - more resources to track, more processing time jitter.

Ordered queues: “pain and suffering”. No sense to use ordered queues when there are multiple producers. Can’t be internally sharded without locking. ACID database table is the best implementation for an ordered queue.

PubSub and SQS are both queues. SQS is one-queue-per-API-call. SQS is pull, PubSub is push/pull. PubSub roughly equals SNS+SQS+Kinesis. SQS has FIFO but performance is limited. Items will clean up after a particular timeout (7-14 days); queues are intended to be a temporary data structure.

Kafka, LogDevice: streaming logs, not queues. Can be fully persistent and emulate SQS/PubSub APIs.

CAP: “sequential consistency” vs “highly available”. See https://www.infoq.com/articles/cap-twelve-years-later-how-the-rules-have-changed for details (it’s not “2 out of 3”).

ACID databases vs. BASE databases: mostly available; soft state - cannot snapshot; eventually consistent. B-Trees (Oracle, MySQL, Postgres, NTFS; small reads, updates in place, insertions, indexes help a lot, OLTP) vs. Log-Structured Merge trees (BigTable, Cassandra, HBase, Lucene, myRocksDB; better for full scans; storage is just logs; heavy write workloads are easy; occasional compaction processes).

Isolation of transactions, weak vs strong: Strong is literally serialized execution. Weak: no dirty read/write, but snapshots and atomic writes are possible. Explicit locks and conflict resolution methods. Strong: more complex locks. Snapshot isolation and merging with aborts and rewinds / fallback to serial execution when a conflict is detected.

Data loss: disk/machine/switch/cluster loss; bugs; security issues. Avoiding data loss with: replication, availability zones, backups (+offsite), checksumming in the background, fixing correctable errors.

Data store formats: columnar vs. row (columns efficient in storage but CPU intensive to search); document vs. cell based (fixed, enforced schema vs. complex schema reconstructed on read); relational/NoSQL/graphs (great for many:many; hard to scale / great for parent-child records; bad at pulling out a single field / vertices and edges, easy to optimize queries). Examples: Riak, Cassandra, Spanner, Dynamo/Neo4j, Oracle, SAP HANA.

Other notes

Several sponsors made various quizzes available, mostly for recruitment purposes. I thought these quizzes had too many of my least favorite type of interview questions: edge cases of commands’ behaviors and specific technology or tool knowledge. It is okay to present those as fun puzzles or to learn/discover something new, but when the context was hiring, this did not leave a good taste.

The vendor area was very crowded, noisy, and hot. I’ve noticed a point of “peak discomfort” around the 500-2000 attendees mark: smaller events won’t have any problems with traffic; larger events typically choose venues well equipped to handle large crowds (conference centers). The middle is usually hosted by hotels and many hotels’ air conditioning systems appear to be designed for providing comfort to crowds in the ballroom / conference rooms only.

I don’t know if it’s more of a SREcon thing or EMEA thing, but most people in the audience represented companies with 1k+ developers. Very few hands (5 out of 300?) from companies having fewer than 20 engineers. It was not that expensive a conference, comparatively.

There are (or, rather, will be) three O’Reilly SRE books:

A Birds of a Feather on training SREs referred to https://github.com/dastergon/awesome-sre.

Postmortem language tip: “what did you think was happening” vs. “why did you do that”.

Many conference clickers only go forward/back. Don’t design slides that require 4-way navigation.